Introduction

Imagine 1983: you’re hammering commands into a CRT terminal, green glow burning your retinas as a sentient machine hums back. Now crank it to 2025, and I’m unleashing HAL—a feral AI assistant that’s half WarGames fever dream, half tech apocalypse. This isn’t some tame chatbot; it’s a knowledge-devouring beast, gorging on 1.21 gigawatts of technical docs—compilers, algorithms, systems lore—spitting out answers faster than a supercomputer on a caffeine bender. Built with Retrieval-Augmented Generation (RAG), HAL’s brain is a swirling vortex of pure data, streaming replies like a teletype possessed. The UI? A retro terminal so slick it’d make WOPR short-circuit with envy. This is HAL’s genesis—my lunatic quest to forge an AI sharper than a plasma cutter and cooler than a mainframe in a snowstorm. Buckle up; we’re going full throttle.

Why HAL? The Vision

Why HAL? It kicked off with a restless itch. Years of wrestling with technical docs—digging through dense PDFs and sprawling manuals—left me hungry for something sharper, something that didn’t make me feel like a scavenger.

I’d already built Complect, a toy compiler in Node.js, to crack open the black box of code transformation (check that story here). But HAL’s different—I wanted an AI that doesn’t just chew on code but swallows whole libraries of knowledge: compilers, algorithms, systems design. Picture a trusted co-conspirator handing me answers on a platter.

HAL’s mission is bold yet clean-cut: fast, personalized tech insights from a towering stack of documents, dialed into my style and needs. It’s about streaming precise responses quicker than I can flip to a table of contents—gorged on gigabytes of tech lore and ready to roll.

Speed’s just the start. HAL’s built to feel alive—a companion that picks up my quirks (like “butter” as my go-to slang) and slices through the noise. This isn’t some lifeless search bar; it’s an AI with a pulse, here to tame the chaos of info overload and level up my coding grind.

Tech Choices: The Stack

HAL’s built on a stack that’s all about power, speed, and a touch of retro flair. I didn’t just throw tools together—this is a calculated lineup to tackle mountains of tech docs and stream answers like a pro. Let’s break it down, layer by layer, and see why each piece clicked into place.

Python: The Glue

I went with Python 3.12.9 to run the show. Why? It’s my go-to for flexibility—whether I’m wrangling AI, piping data, or debugging late-night ideas, Python’s ecosystem has my back. Paired with uv for dependency management, it keeps HAL’s guts clean and humming on WSL Ubuntu 22.04—a solid Linux base that plays nice with my dev flow.

FastAPI: Real-Time Backbone

For the API layer, FastAPI was a no-brainer. This async beast serves up WebSocket streaming and endpoints with ruthless efficiency—think low-latency replies that keep HAL feeling snappy. It’s handling the chatter between the UI and the core, and it’s lean enough to scale for multi-user support.
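To make the streaming idea concrete, here is a minimal sketch using only `asyncio`. It is not HAL's actual FastAPI code: `fake_generate`, `relay`, and `run_demo` are hypothetical names, and an `asyncio.Queue` stands in for the WebSocket connection. The point it illustrates is that each token is forwarded the moment it is produced instead of waiting for the full reply.

```python
import asyncio
from typing import AsyncIterator


async def fake_generate(reply: str) -> AsyncIterator[str]:
    """Hypothetical stand-in for the model: yields one word at a time."""
    for word in reply.split():
        await asyncio.sleep(0)  # hand control back to the event loop
        yield word


async def relay(tokens: AsyncIterator[str], outbox: asyncio.Queue) -> int:
    """Forward tokens to the client as they arrive; None marks the end."""
    sent = 0
    async for token in tokens:
        await outbox.put(token)
        sent += 1
    await outbox.put(None)
    return sent


async def demo(reply: str) -> list[str]:
    # The queue plays the role of the socket between server and UI.
    outbox: asyncio.Queue = asyncio.Queue()
    producer = asyncio.create_task(relay(fake_generate(reply), outbox))
    received = []
    while (token := await outbox.get()) is not None:
        received.append(token)
    await producer
    return received


def run_demo(reply: str) -> list[str]:
    return asyncio.run(demo(reply))
```

In a real FastAPI WebSocket handler, the `outbox.put(token)` call would presumably become `await websocket.send_text(token)`, with the rest of the loop unchanged.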

vLLM: The Language Engine

The brainpower comes from vLLM, driving meta-llama/Llama-3.2-3B-Instruct. This lightweight LLM fits my NVIDIA RTX 4080 (16GB VRAM) like a glove: fast CUDA-accelerated inference and just enough muscle to generate sharp answers without choking. My i9-13900KF (24 cores) and 128GB RAM keep it fed.

Qdrant: Vector Smarts

Storing HAL’s knowledge is Qdrant, a vector database that’s pure magic for retrieval. It indexes 1024-dimensional embeddings from thenlper/gte-large, letting me search millions of doc chunks in milliseconds with HNSW indexing. Qdrant’s the backbone of HAL’s brain, split into three collections that power its smarts—each one a piece of the puzzle.

Docs - The RAG Vault

The hal_docs collection is HAL’s treasure chest—packed with tech gold from books ingested via RAG. Think compilers, algorithms, Node.js deep dives, and systems design, shredded into highly optimized chunks. It’s the raw fuel for HAL’s answers, searchable in a blink thanks to those HNSW-tuned vectors.

History - Chat Memory

Then there’s hal_history, the session chatter keeper. Every query and response gets embedded here, tied to a convo ID, so HAL can riff off what we’ve talked about—within a session, at least (it resets on restart for now). It’s lightweight, fast, and gives HAL that short-term memory to feel like a real partner.
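The session-scoped memory described here can be sketched with a plain dictionary keyed by conversation ID. This is a simplification under stated assumptions: the real hal_history stores embeddings in Qdrant, while this version keeps raw text in memory, and `SessionHistory` and `max_turns` are hypothetical names. What it does share with the description is that everything vanishes on restart.

```python
from collections import defaultdict, deque


class SessionHistory:
    """In-memory chat memory keyed by conversation ID (lost on restart)."""

    def __init__(self, max_turns: int = 20):
        # max_turns is a made-up cap for illustration, not a HAL setting.
        self._turns = defaultdict(lambda: deque(maxlen=max_turns))

    def add(self, convo_id: str, query: str, response: str) -> None:
        """Record one query/response pair for a conversation."""
        self._turns[convo_id].append((query, response))

    def recent(self, convo_id: str, n: int = 3) -> list[tuple[str, str]]:
        """Return up to n most recent turns, oldest first."""
        return list(self._turns.get(convo_id, ()))[-n:]
```

Keying on the conversation ID is what keeps one session's chatter from leaking into another.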

Facts - User Soul Map

The hal_facts collection is where HAL gets personal. This isn’t just dry data—it’s a growing stash of insights HAL picks up about me (or any user), like my love for “butter” as slang or my habit of asking about compiler quirks. Think of it as HAL’s sketchbook of user vibes—small now, but poised to drive tailored replies as it learns.
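The three collections above can be written down as plain data. The sketch below is only an illustration: `CollectionSpec` and `validate_vector` are hypothetical names, and the cosine distance is an assumption, since the post does not say which metric is configured. The 1024 vector size comes from thenlper/gte-large.

```python
from dataclasses import dataclass

EMBEDDING_DIM = 1024  # output size of thenlper/gte-large


@dataclass(frozen=True)
class CollectionSpec:
    name: str
    purpose: str
    vector_size: int = EMBEDDING_DIM
    distance: str = "Cosine"  # assumption: the metric is not stated in the post


COLLECTIONS = (
    CollectionSpec("hal_docs", "chunks from ingested books"),
    CollectionSpec("hal_history", "per-conversation queries and replies"),
    CollectionSpec("hal_facts", "things HAL has learned about a user"),
)


def validate_vector(spec: CollectionSpec, vector: list[float]) -> bool:
    """A vector only belongs in a collection if its length matches."""
    return len(vector) == spec.vector_size
```

Because all three share one embedding model, a single query vector can be searched against any of them.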

Ingestion: From PDFs to Chunks

To stuff Qdrant with knowledge, I tap pymupdf4llm. It tears PDFs into Markdown like a digital shredder, slicing them into chunks with ruthless precision. Then comes the magic: custom scoring rules, powered by spaCy, filter out the fluff—low-value noise doesn’t stand a chance. SentenceTransformer steps in to encode those chunks into embeddings, juiced up by CUDA acceleration for blazing speed. This pipeline rips through mountains of docs and turns raw PDFs into HAL’s brain food.

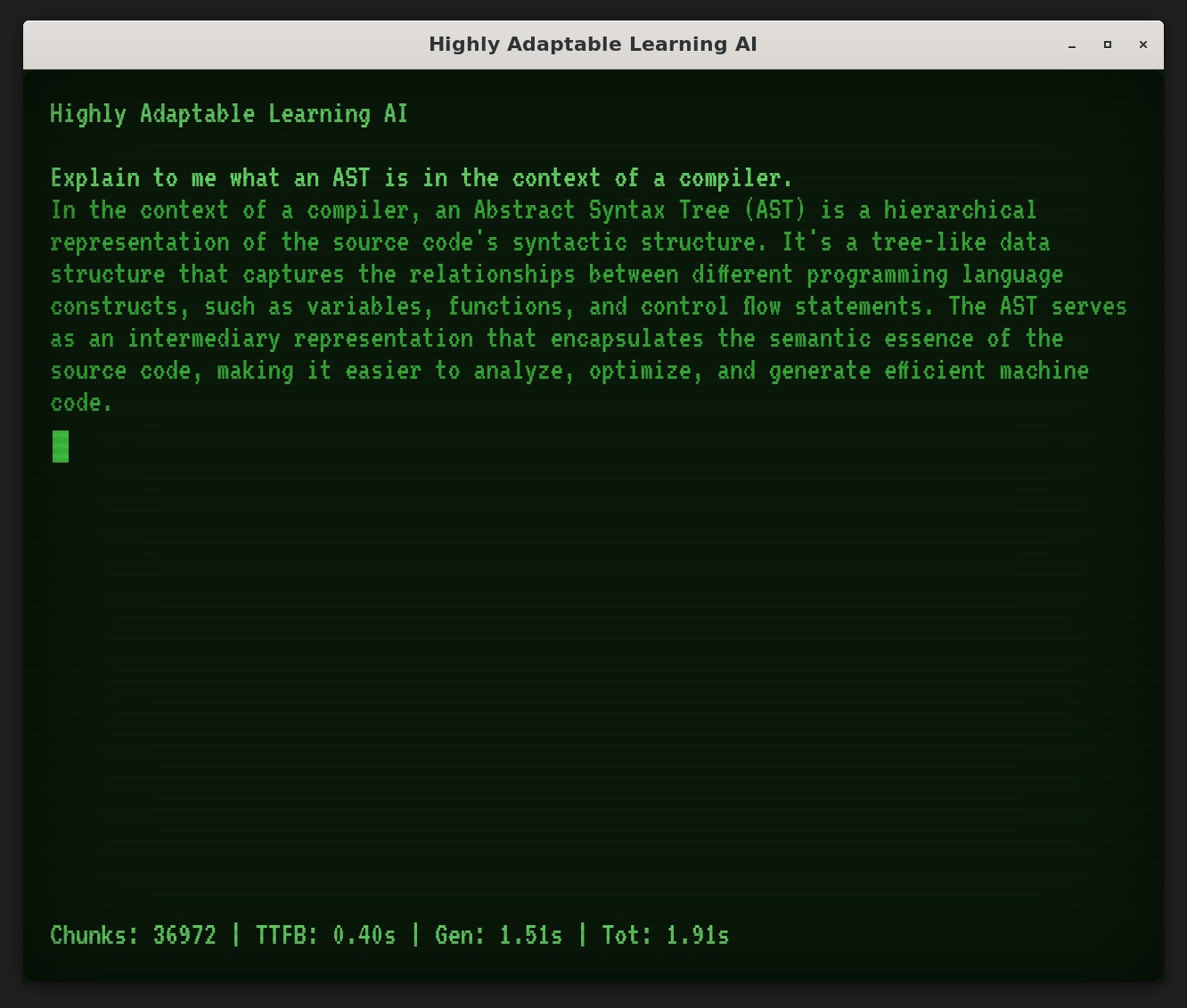

Tauri: Retro UI Magic

Up front, Tauri delivers the WarGames vibes in spades. This cross-platform framework wraps a JavaScript-driven UI that streams via WebSockets, drenched in a nostalgic CRT glow: green phosphor flickering, scanlines streaking across the screen. It’s not just a look; it’s a feel, with every keystroke echoing like a teletype from 1983. Lightweight and snappy, Tauri keeps the system lean while letting me crank that classic terminal soul to eleven. It’s HAL’s window into a coder’s time machine.

The Bet

This stack’s a bet on balance—raw compute from my RTX and i9, agility from Python and FastAPI, and smarts from vLLM and Qdrant. It’s tuned to make HAL a doc-crunching, answer-slinging machine, with a UI that’s as fun as it is functional.

Architectural Layers

HAL’s more than a clever trick—it’s a layered beast, built to chew through tech docs and spit out gold. I didn’t just slap this together; every piece fits to make HAL fast, smart, and alive. Here’s the high-level breakdown of its guts, a teaser for the flows that make it tick.

Ingestion

First up, the ingestion layer—where PDFs meet their maker. This is HAL’s feeder, ripping docs into bite-sized chunks and packing them into a knowledge vault. It’s a slick pipeline, tuned to shred and store, ready to fuel the next step.

Retrieval

Next, retrieval—HAL’s secret sauce. This layer digs through that vault with vector-powered smarts, snagging the juiciest chunks in a flash. It’s the bridge between raw data and real answers, primed for speed and precision.

Generation

Then comes generation—the beating heart. Here, HAL’s language model kicks in, weaving those chunks into replies that stream like a live feed. It’s where tech lore turns into tailored insights, fast enough to keep up with my grind.

UI

Finally, the UI layer—HAL’s green-glow face. This is the WarGames-style window where it all comes together, streaming answers through a CRT lens. It’s not just pretty; it’s the coder’s gateway to HAL’s soul.

The Big Picture

These layers mesh like clockwork—ingestion feeds retrieval, retrieval fuels generation, and the UI ties it all into a nostalgia-laced bow. Stick around; the flows below dive deeper into how HAL pulls this off.

Ingestion Flow

erDiagram

direction LR

PDFs ||--o{ Markdown : "Converted"

Markdown ||--o{ Chunks : "Parsed, Scored"

Chunks ||--o{ Embeddings : "Encoded"

Embeddings ||--o{ Qdrant : "Stored"

HAL’s brain doesn’t grow on trees—it’s forged from a flood of PDFs, transformed into fuel through a relentless ingestion pipeline. This is where raw tech docs meet their destiny, shredded and shaped into HAL’s knowledge core. Here’s how it happens, step by step, with a diagram to seal the deal.

Step 1: PDF Rip

It starts with pymupdf4llm. This tool rips PDFs into Markdown like a digital chainsaw, pulling text from piles of tech tomes—compilers, algorithms, the works. No page left unturned, no detail spared.

Step 2: Chunk Magic

Next, the Markdown gets sliced into chunks—bite-sized pieces HAL can chew. Custom rules kick in here, powered by spaCy, to axe the fluff and keep the gold. It’s a ruthless culling—only the best make it through.
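The chunk-and-cull step can be sketched as follows. This is a toy version under stated assumptions: the real scoring rules use spaCy and are not shown in the post, so a simple word-likeness heuristic is swapped in here, and `split_markdown`, `score_chunk`, `keep_good_chunks`, and the 0.5 threshold are all hypothetical.

```python
import re


def split_markdown(markdown: str) -> list[str]:
    """Split Markdown into chunks, starting a new chunk at each heading."""
    chunks, current = [], []
    for line in markdown.splitlines():
        if re.match(r"#{1,6}\s", line) and current:
            chunks.append("\n".join(current).strip())
            current = []
        current.append(line)
    if current:
        chunks.append("\n".join(current).strip())
    return [c for c in chunks if c]


def score_chunk(chunk: str) -> float:
    """Toy quality score in [0, 1]: the share of tokens that look like
    words, scaled down for chunks shorter than 20 tokens."""
    tokens = chunk.split()
    if not tokens:
        return 0.0
    wordlike = sum(
        1 for t in tokens if re.fullmatch(r"[A-Za-z][A-Za-z'\-]*[.,;:!?]?", t)
    )
    length_factor = min(len(tokens) / 20, 1.0)
    return (wordlike / len(tokens)) * length_factor


def keep_good_chunks(markdown: str, threshold: float = 0.5) -> list[str]:
    """Drop chunks whose score falls below the (made-up) threshold."""
    return [c for c in split_markdown(markdown) if score_chunk(c) >= threshold]
```

A table-of-contents fragment full of page numbers and dot leaders scores near zero under this heuristic, while ordinary prose scores close to one.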

Step 3: Embedding Juice

Those chunks hit SentenceTransformer for the big encode. CUDA acceleration fires it up, turning text into 1024-dimensional embeddings—dense vectors that pack meaning into math. This is HAL’s brain food taking shape.

Step 4: Qdrant Vault

Finally, the embeddings land in Qdrant—the vault where HAL’s smarts live. Indexed with HNSW for lightning searches, collections like hal_docs (tech books) and hal_history (chat logs) get stacked and ready. It’s a clean handoff to the retrieval layer.
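Before anything lands in the vault, each chunk has to become a record. Qdrant points carry an ID, a vector, and a payload; the sketch below builds records in that shape with the standard library only. The payload fields, the `make_point` and `in_batches` helpers, and the choice of deterministic UUIDs are assumptions for illustration, not HAL's actual schema.

```python
import uuid


def make_point(source: str, chunk_index: int, text: str, vector: list[float]) -> dict:
    """Build one record in the id / vector / payload shape Qdrant points use.

    The ID is a UUID derived from the source and position, so ingesting the
    same book twice produces the same IDs instead of fresh duplicates.
    """
    point_id = uuid.uuid5(uuid.NAMESPACE_URL, f"{source}#{chunk_index}")
    return {
        "id": str(point_id),
        "vector": vector,
        "payload": {"source": source, "chunk_index": chunk_index, "text": text},
    }


def in_batches(points: list[dict], size: int):
    """Yield fixed-size batches so large books are uploaded in pieces."""
    for start in range(0, len(points), size):
        yield points[start:start + size]
```

Keeping the chunk text in the payload means a search hit can be dropped straight into a prompt without a second lookup.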

The Flow in Action

This pipeline’s a beast! Gigabytes of docs devoured in no time, from PDF to vector in one smooth rip.

Usage Flow

sequenceDiagram

actor U as User

participant UI as HAL UI

participant API as HAL API

participant Q as Qdrant

participant V as vLLM

participant E as External

U->>UI: Input Query

UI->>API: HTTP POST

API->>Q: Fetch Chunks

API->>E: Fetch GitHub/arXiv

API->>V: Generate

V-->>API: Streamed Text

API-->>UI: Response

UI-->>U: Display

HAL doesn’t sit idle—it’s a live wire, turning queries into answers with a slick, end-to-end dance. This is the runtime magic, where docs, memory, and AI collide to deliver the goods. Here’s how it rolls, from keystroke to green glow, with a diagram to trace the pulse.

Step 1: Query Kickoff

It starts with you, typing a question into the Tauri UI. That input goes to the FastAPI core as a lean HTTP POST, and the reply streams back over a WebSocket. No lag, just a straight shot to HAL’s engine.

Step 2: Chunk Hunt

FastAPI hands off to Qdrant, where the retrieval layer springs into action. Vector searches snag the top chunks from hal_docs and hal_history, and the API can also fetch from GitHub and arXiv. It’s HAL’s memory flexing hard.
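What a vector search computes can be shown in a few lines. The sketch below is an exact, brute-force top-k by cosine similarity; Qdrant's HNSW index returns approximately the same neighbours without scoring every chunk. The `cosine` and `top_k` helpers and the two-dimensional toy vectors are illustrative assumptions, and cosine is assumed as the metric.

```python
import heapq
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k(query: list[float], chunks: list[tuple[str, list[float]]], k: int = 3):
    """Exact nearest-neighbour search: score every chunk, keep the best k.

    Returns (score, text) pairs, highest score first.
    """
    scored = ((cosine(query, vec), text) for text, vec in chunks)
    return heapq.nlargest(k, scored)
```

Brute force costs one comparison per stored chunk, which is the cost an approximate index like HNSW is designed to avoid at scale.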

Step 3: Answer Brew

Those chunks feed vLLM (meta-llama/Llama-3.2-3B-Instruct), the generation powerhouse. CUDA kicks in, streaming text back through FastAPI as it forms—answers in seconds, tailored and sharp. This is HAL thinking on its feet.
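How retrieved chunks and chat history reach the model is not spelled out here, so the following is only a sketch of one common approach: concatenate them into a single prompt under a size budget. The `build_prompt` function, the prompt wording, and the character budget are all hypothetical.

```python
def build_prompt(
    question: str,
    chunks: list[str],
    history: list[tuple[str, str]],
    max_context_chars: int = 2000,
) -> str:
    """Assemble one prompt string from context, history, and the question.

    Chunks are assumed to arrive best-first; once one does not fit in the
    budget, it and everything after it are left out.
    """
    context, used = [], 0
    for chunk in chunks:
        if used + len(chunk) > max_context_chars:
            break
        context.append(chunk)
        used += len(chunk)

    lines = ["Answer the question using the context below.", "Context:"]
    lines += [f"[{i}] {c}" for i, c in enumerate(context, start=1)]
    if history:
        lines.append("Conversation so far:")
        for past_query, past_reply in history:
            lines += [f"User: {past_query}", f"HAL: {past_reply}"]
    lines += [f"User: {question}", "HAL:"]
    return "\n".join(lines)
```

A small model has a limited context window, so capping the context and ordering chunks best-first keeps the most relevant material in view.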

Step 4: UI Glow

Back to Tauri, the response streams live—green phosphor text blooming across the CRT-style screen. You see it unfold, real-time, like a teletype with soul. Query in, answer out—done.

The Flow in Motion

This is HAL unleashed: query to reply in a heartbeat, weaving docs, memory, and AI into a seamless loop. The sequence diagram at the top of this section tracks every beat.

What’s Next?

HAL’s just getting warmed up—I’ve got plans to crank this beast to the next level. The core’s solid, but there’s more juice to squeeze from this stack. Here’s what’s brewing for the road ahead.

Multi-User Muscle

Imagine HAL juggling conversations like a seasoned maestro—multi-user support brings that to life. Picture distinct threads for each user, seamlessly tracked so HAL knows who’s who, whether it’s me or the whole team firing off queries. It’s a smooth unlock, letting HAL scale from solo act to crew companion with effortless precision.

External Knowledge Grab

HAL’s about to tap into a wild stream of external wisdom—think GitHub’s bustling repos, arXiv’s cutting-edge papers, and MDN’s web-dev goldmine. Picture live docs and abstracts flowing in, seamlessly woven into HAL’s answers, stretching its reach far beyond my local stash. It’s a knowledge boost that turns HAL into a global tech whisperer, pulling the best insights from the wild.

Personalization Punch

HAL’s set to get personal, building a living map of user facts to shape every chat. Imagine it soaking up details—my slang quirks, favorite tech tangents, little nuggets of who I am—keeping track so each reply feels like it’s just for me. It’s HAL dialing in, turning conversations into a custom fit, not a one-size-fits-all echo.

Agentic Edge

HAL’s eyeing an agentic upgrade—think a sidekick that doesn’t just talk tech but jumps into action. Picture it crafting Python snippets, whipping up emails, or digging through X on my behalf—all with a sharp, proactive edge. It’s HAL evolving from answer machine to trusted doer, ready to roll up its sleeves.

The Horizon

HAL’s upgrades—multi-user threads, external knowledge streams, personalized vibes, and agentic flair—are set to catapult it from a solo sidekick to a towering tech titan. Picture a future where HAL juggles crews, taps global wisdom, tailors every word, and leaps into action—all humming on a stack built for the long haul. This isn’t just a tool evolving; it’s a revolution unfolding—stay strapped in for a ride that’s only begun to roar.

Who knows, I may even add an amber mode.

Closing Thoughts

HAL’s not just a project—it’s a mission to tame the tech doc jungle and turn chaos into clarity. Building it matters because it’s my shot at bending AI to my will, delivering answers that hit hard and fast while feeling like a convo with a friend. The fun? Wrestling this stack into shape—watching Python, Qdrant, and Tauri click, seeing that green CRT glow come alive. It’s been a grind with a grin, and I’m nowhere near done. HAL’s a spark that’s lit something big—stick around, the fire’s just getting started.